⇧ amazing...

Getting a CA certificate error with the Python elasticsearch library...

About Elasticsearch

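⇧ Back when I wrote the article above, I created a CA certificate with elasticsearch-certutil, the command that ships with Elasticsearch. But when I pass that CA certificate to ca_certs in the Python elasticsearch library, I get an error...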
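One thing worth ruling out first: `ca_certs` expects a PEM file, while `elasticsearch-certutil ca` writes a PKCS#12 file (`elastic-stack-ca.p12`) unless you pass `--pem`. Here's a minimal sketch, standard library only, that reports how many CA certificates Python's `ssl` module can load from a given file. `count_ca_certs` is a hypothetical helper name, not part of any library.

```python
import ssl


def count_ca_certs(ca_path: str) -> int:
    """Return how many CA certificates the ssl module loads from ca_path.

    Returns -1 if the file is missing or OpenSSL cannot read it as PEM
    (a PKCS#12 .p12 file, for example).
    """
    try:
        # With cafile given, only this file is loaded (no system CA store).
        ctx = ssl.create_default_context(cafile=ca_path)
    except OSError:
        # FileNotFoundError and ssl.SSLError are both subclasses of OSError.
        return -1
    # "x509_ca" counts loaded certificates that OpenSSL treats as CAs.
    return ctx.cert_store_stats()["x509_ca"]
```

For example, `count_ca_certs("/etc/elasticsearch/certs/ca/ca.crt")` (the path used in the code later in this post). `-1` means the file couldn't be read as PEM at all, and `0` means it loaded but contains no CA certificate. Either way it won't work as `ca_certs`.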

Starting new HTTPS connection (1): 10.255.255.16:9200

HEAD https://10.255.255.16:9200/tweepy_twitter_api_v2 [status:N/A duration:0.011s]

Node <Urllib3HttpNode(https://10.255.255.16:9200)> has failed for 1 times in a row, putting on 1 second timeout

Retrying request after failure (attempt 0 of 3)

Traceback (most recent call last):

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_transport.py", line 329, in perform_request

meta, raw_data = node.perform_request(

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_node/_http_urllib3.py", line 199, in perform_request

raise err from None

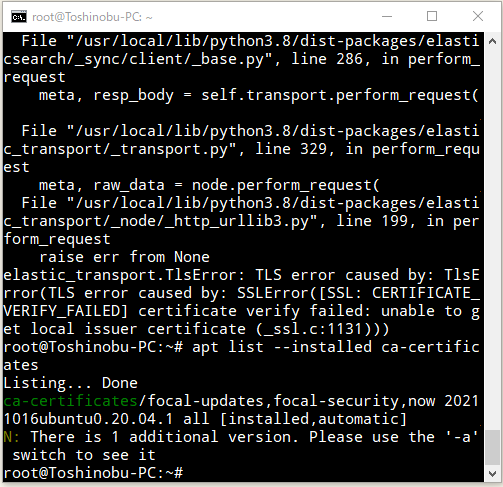

elastic_transport.TlsError: TLS error caused by: SSLError([SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1131))

Starting new HTTPS connection (2): 10.255.255.16:9200

HEAD https://10.255.255.16:9200/tweepy_twitter_api_v2 [status:N/A duration:0.009s]

Node <Urllib3HttpNode(https://10.255.255.16:9200)> has failed for 2 times in a row, putting on 2 second timeout

Retrying request after failure (attempt 1 of 3)

Traceback (most recent call last):

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_transport.py", line 329, in perform_request

meta, raw_data = node.perform_request(

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_node/_http_urllib3.py", line 199, in perform_request

raise err from None

elastic_transport.TlsError: TLS error caused by: SSLError([SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1131))

Starting new HTTPS connection (3): 10.255.255.16:9200

HEAD https://10.255.255.16:9200/tweepy_twitter_api_v2 [status:N/A duration:0.009s]

Node <Urllib3HttpNode(https://10.255.255.16:9200)> has failed for 3 times in a row, putting on 4 second timeout

Retrying request after failure (attempt 2 of 3)

Traceback (most recent call last):

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_transport.py", line 329, in perform_request

meta, raw_data = node.perform_request(

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_node/_http_urllib3.py", line 199, in perform_request

raise err from None

elastic_transport.TlsError: TLS error caused by: SSLError([SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1131))

Starting new HTTPS connection (4): 10.255.255.16:9200

HEAD https://10.255.255.16:9200/tweepy_twitter_api_v2 [status:N/A duration:0.012s]

Node <Urllib3HttpNode(https://10.255.255.16:9200)> has failed for 4 times in a row, putting on 8 second timeout

Traceback (most recent call last):

File "twitter_tweepy.py", line 59, in <module>

if es.indices.exists(index=elastic_index) == False:

File "/usr/local/lib/python3.8/dist-packages/elasticsearch/_sync/client/utils.py", line 414, in wrapped

return api(*args, **kwargs)

File "/usr/local/lib/python3.8/dist-packages/elasticsearch/_sync/client/indices.py", line 1111, in exists

return self.perform_request( # type: ignore[return-value]

File "/usr/local/lib/python3.8/dist-packages/elasticsearch/_sync/client/_base.py", line 390, in perform_request

return self._client.perform_request(

File "/usr/local/lib/python3.8/dist-packages/elasticsearch/_sync/client/_base.py", line 286, in perform_request

meta, resp_body = self.transport.perform_request(

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_transport.py", line 329, in perform_request

meta, raw_data = node.perform_request(

File "/usr/local/lib/python3.8/dist-packages/elastic_transport/_node/_http_urllib3.py", line 199, in perform_request

raise err from None

elastic_transport.TlsError: TLS error caused by: TlsError(TLS error caused by: SSLError([SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1131)))

⇧ Hmm, I can't figure it out...

⇧ I checked things by following the site above, and

⇧ ca-certificates is already installed, so I'll have to look for another fix.
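For context: the `ca-certificates` package only manages the public CAs in the system store (on Ubuntu, `/etc/ssl/certs/ca-certificates.crt`). A CA generated locally by `elasticsearch-certutil` isn't in there unless you add it yourself, so reinstalling or upgrading the package shouldn't be expected to change this error. Here's a rough sketch that checks this by comparing PEM blocks. `ca_in_bundle` is a hypothetical helper, and the comparison is purely textual; it does not parse the certificates.

```python
def _pem_blocks(text: str) -> set:
    """Return the base64 body of each PEM certificate block, whitespace removed."""
    begin, end = "-----BEGIN CERTIFICATE-----", "-----END CERTIFICATE-----"
    blocks = set()
    pos = 0
    while True:
        start = text.find(begin, pos)
        if start == -1:
            break
        stop = text.find(end, start)
        if stop == -1:
            break
        body = text[start + len(begin):stop]
        # Ignore line wrapping so re-wrapped copies of a cert still match.
        blocks.add("".join(body.split()))
        pos = stop + len(end)
    return blocks


def ca_in_bundle(ca_pem: str, bundle_pem: str) -> bool:
    """True if every certificate block in ca_pem also appears in bundle_pem."""
    wanted = _pem_blocks(ca_pem)
    return bool(wanted) and wanted <= _pem_blocks(bundle_pem)
```

Read `/etc/elasticsearch/certs/ca/ca.crt` and `/etc/ssl/certs/ca-certificates.crt` and pass their contents in. For a certutil-generated CA that you haven't added to the system store, `False` is the expected result.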

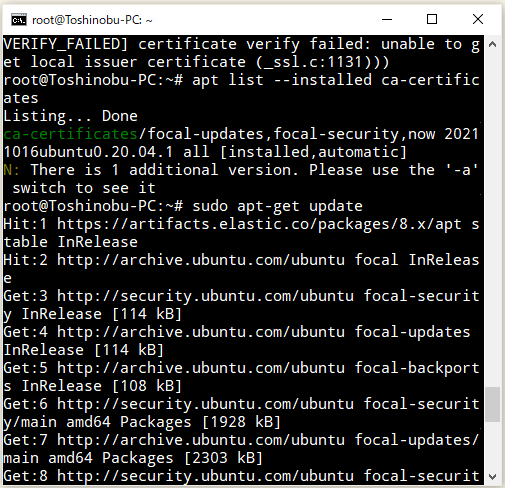

⇧ Following the site above, I tried upgrading it.

sudo apt-get update
sudo apt --only-upgrade install ca-certificates

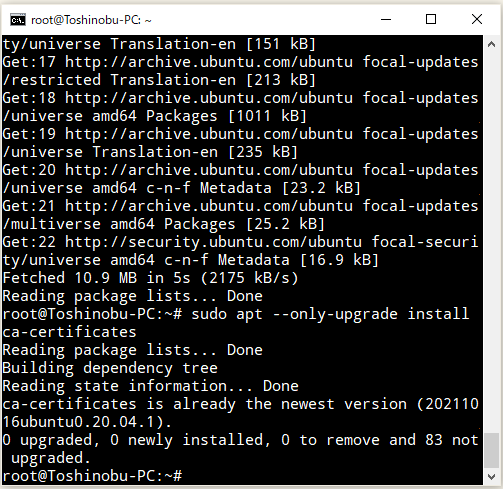

No luck...

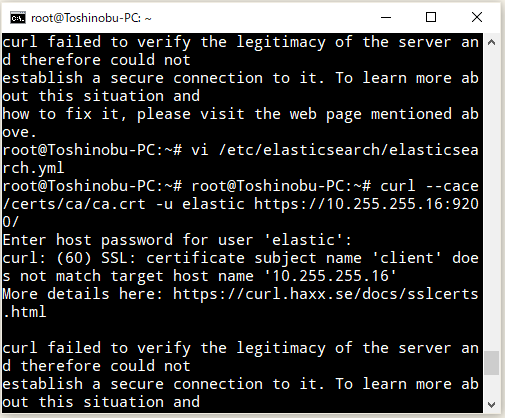

curl fails with the same error.

Apparently,

⇧ according to the site above, the certificate itself might be the wrong one.
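`unable to get local issuer certificate` is OpenSSL's verify error 20. It means none of the certificates the client trusts issued the server's certificate, which fits the "wrong certificate" theory. Below is a hedged, standard-library-only sketch. It does the same TLS handshake the client would do, trusting only one CA file, and turns the OpenSSL verify code (exposed as `ssl.SSLCertVerificationError.verify_code`) into a short hint. `probe`, `explain_verify_code` and the hint texts are my own illustrations, not part of elasticsearch-py.

```python
import socket
import ssl
from typing import Optional

# A few OpenSSL X509_V_ERR_* verify codes and what they usually mean here.
VERIFY_HINTS = {
    10: "certificate has expired",
    18: "server certificate is self-signed and is not the one in ca_certs",
    19: "chain ends in a self-signed CA that ca_certs does not contain",
    20: "ca_certs does not contain the CA that issued the server certificate",
    62: "host name is not in the server certificate",
    64: "IP address is not in the server certificate",
}


def explain_verify_code(code: int) -> str:
    """Map an OpenSSL verify code to a short hint."""
    return VERIFY_HINTS.get(code, f"other verification error (code {code})")


def probe(host: str, port: int, ca_path: str,
          server_hostname: Optional[str] = None) -> str:
    """Handshake with host:port trusting only ca_path; return "ok" or a hint."""
    # cafile is given, so only ca_path is trusted (the system store is not loaded).
    ctx = ssl.create_default_context(cafile=ca_path)
    with socket.create_connection((host, port), timeout=5) as sock:
        try:
            # server_hostname is the name (or IP) checked against the certificate.
            with ctx.wrap_socket(sock, server_hostname=server_hostname or host):
                return "ok"
        except ssl.SSLCertVerificationError as err:
            return explain_verify_code(err.verify_code)
```

For example, `probe("10.255.255.16", 9200, "/etc/elasticsearch/certs/ca/ca.crt")` (host and path from this post) would be expected to return the code-20 hint while the CA is wrong. Once the CA is right, you would expect the code-64 hint if the certificate has no entry for that IP.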

⇧ According to the site above, you should give the client the certificate configured in /etc/elasticsearch/elasticsearch.yml.
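To see which certificate elasticsearch.yml actually points at, here's a rough sketch that pulls the `xpack.security.http.ssl.*` settings out of the file. It only understands flat dotted keys and a single nested block under `xpack.security.http.ssl:`, which as far as I know is the layout the 8.x auto-configuration writes. It is not a YAML parser. `http_ssl_settings` and `resolve` are hypothetical helpers. Relative paths in elasticsearch.yml are resolved against the config directory, assumed here to be /etc/elasticsearch.

```python
import os

PREFIX = "xpack.security.http.ssl"


def http_ssl_settings(yml_text: str) -> dict:
    """Collect xpack.security.http.ssl.* settings from elasticsearch.yml text.

    Understands only flat dotted keys and one nested block under
    "xpack.security.http.ssl:"; this is not a general YAML parser.
    """
    settings = {}
    in_block = False
    for raw in yml_text.splitlines():
        line = raw.split("#", 1)[0].rstrip()  # drop comments and trailing spaces
        if not line.strip():
            continue
        key, _, value = line.strip().partition(":")
        key, value = key.strip(), value.strip().strip("\"'")
        if line[0] not in " \t":
            # Top-level key: either the nested block header or a flat dotted key.
            in_block = key == PREFIX and value == ""
            if key.startswith(PREFIX + "."):
                settings[key[len(PREFIX) + 1:]] = value
        elif in_block:
            settings[key] = value
    return settings


def resolve(path: str, config_dir: str = "/etc/elasticsearch") -> str:
    """Turn a path from elasticsearch.yml into an absolute path (config dir assumed)."""
    return path if os.path.isabs(path) else os.path.join(config_dir, path)
```

If the setting turns out to be a keystore such as `certs/http.p12`, `ca_certs` needs the PEM file of the CA that signed it, not the keystore itself. On 8.x package installs with auto-configured security, that is usually `certs/http_ca.crt`. The documentation's `http_ca.crt` presumably refers to that file.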

However, the Python elasticsearch documentation says:

# Create the client instance
client = Elasticsearch(
    "https://localhost:9200",
    ca_certs="/path/to/http_ca.crt",
    basic_auth=("elastic", ELASTIC_PASSWORD)
)

⇧ That's all it says, though... the documentation is way too sloppy...

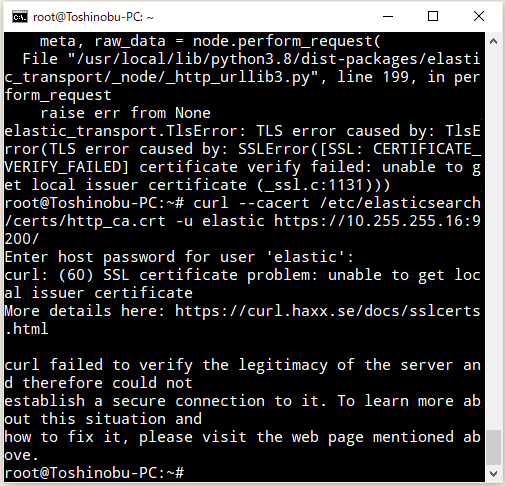

So I tried the certificate configured in /etc/elasticsearch/elasticsearch.yml, but

⇧ it errors out either way...

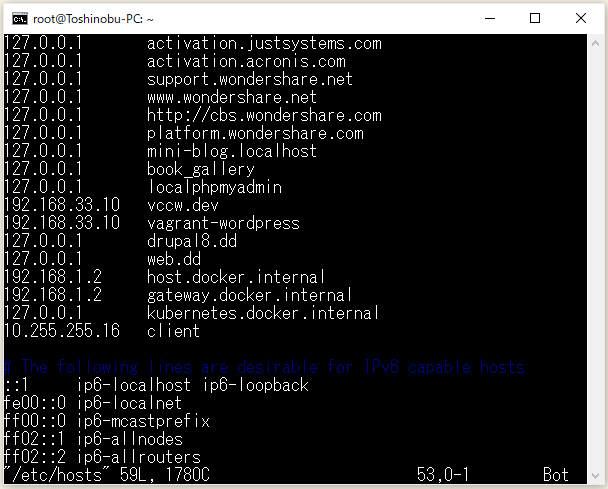

⇧ According to the site above, you also need to set up /etc/hosts and the network configuration.

⇧ On Ubuntu 20.04, the network configuration file apparently has to be created from scratch.

In my environment, though, I got it working without creating the network configuration file.

I added the "host name" and "subject name" pair that appeared in the error to the /etc/hosts file.
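Why this helps: hostname verification compares the name the client connects to with the names in the server certificate (its subject / SAN entries). So the host name used in the client (`client` in the code in this post) has to appear in the certificate, and it also has to resolve to the Elasticsearch host. Here's a small sketch that appends such an entry to hosts-file text, but only if it isn't already there. `add_hosts_entry` is hypothetical. The IP `10.255.255.16` and the name `client` come from the log and code in this post, so substitute your own values.

```python
import ipaddress


def add_hosts_entry(hosts_text: str, ip: str, hostname: str) -> str:
    """Return hosts_text with "ip<TAB>hostname" appended unless already mapped."""
    ipaddress.ip_address(ip)  # raises ValueError for a malformed address
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments
        if fields and fields[0] == ip and hostname in fields[1:]:
            return hosts_text  # mapping already present, leave the file alone
    if hosts_text and not hosts_text.endswith("\n"):
        hosts_text += "\n"
    return hosts_text + f"{ip}\t{hostname}\n"
```

Writing the result back to /etc/hosts needs root, for example via `sudo`.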

If you're using WSL 2 (Windows Subsystem for Linux 2),

⇧ add a setting to /etc/wsl.conf so that /etc/hosts doesn't get reset.
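On WSL 2, the setting in question is `generateHosts = false` under the `[network]` section of /etc/wsl.conf. Here's a sketch that adds it using `configparser`. `disable_generate_hosts` is a hypothetical helper. Note that `configparser` drops any comments when it rewrites the file.

```python
import configparser
import io


def disable_generate_hosts(conf_text: str) -> str:
    """Return wsl.conf text with [network] generateHosts = false set."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep "generateHosts" as written (default lowercases keys)
    parser.read_string(conf_text)
    if not parser.has_section("network"):
        parser.add_section("network")
    parser.set("network", "generateHosts", "false")
    buf = io.StringIO()
    parser.write(buf)  # comments from conf_text are not preserved
    return buf.getvalue()
```

WSL reads wsl.conf when the distribution starts, so run `wsl --shutdown` from Windows and reopen Ubuntu for the change to take effect.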

Also, when starting Ubuntu on WSL 2 (Windows Subsystem for Linux 2),

⇧ you probably need to add the IP address.
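Assuming this means putting the address from the log (10.255.255.16) back on the WSL network interface, the usual command is `ip addr add <address>/<prefix> dev <device>`. An address added that way does not survive a restart of WSL, which is why it has to be redone at startup. Here's a tiny sketch that validates the address and builds that command. The `/24` prefix, the `eth0` device name and `ip_addr_add_cmd` itself are all assumptions.

```python
import ipaddress


def ip_addr_add_cmd(address_with_prefix: str, dev: str = "eth0") -> list:
    """Build `sudo ip addr add ADDRESS/PREFIX dev DEV` as an argument list."""
    # ip_interface validates the address and keeps the prefix, e.g. 10.255.255.16/24
    iface = ipaddress.ip_interface(address_with_prefix)
    return ["sudo", "ip", "addr", "add", iface.with_prefixlen, "dev", dev]
```

You could run the result with `subprocess.run(cmd, check=True)` from a startup script, or just type the equivalent command by hand.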

Then I fixed the Python code.

import tweepy
import json
from textwrap import TextWrapper
from datetime import datetime
from elasticsearch import Elasticsearch
#from tweepy import stream
from logging import StreamHandler, Formatter
from logging import DEBUG, NOTSET
import logging
import certifi

stream_handler = StreamHandler()
stream_handler.setLevel(DEBUG)
stream_handler.setFormatter(Formatter("%(message)s"))
logging.basicConfig(level=NOTSET, handlers=[stream_handler])
logger = logging.getLogger(__name__)

#consumer_key="CONSUMER_KEY_GOES_HERE"
#consumer_secret="CONSUMER_SECRET_GOES_HERE"
#access_token="ACCESS_TOKEN_GOES_HERE"
#access_token_secret="ACCESS_TOKEN_SECRET_GOES_HERE"
bearer_token="BEARER_TOKEN_GOES_HERE"
#auth = tweepy.OAuthHandler(consumer_key, consumer_secret)
#auth.set_access_token(access_token, access_token_secret)

# "client" is the host name added to /etc/hosts; it has to match a name
# in the Elasticsearch HTTP certificate for hostname verification to pass.
es = Elasticsearch(
    [
        {
            'host': "client",
            'port': 9200,
            'scheme': "https"
        }
    ],
    basic_auth=('elastic', 'password'),
    verify_certs=True,
    #ca_certs=certifi.where()
    # PEM file of the CA that signed the HTTP certificate
    ca_certs="/etc/elasticsearch/certs/ca/ca.crt",
    #ssl_assert_fingerprint='FINGER_PRINT_GOES_HERE'
    #client_cert="/etc/elasticsearch/certs/ca/ca.crt",
    #client_key="/etc/elasticsearch/certs/ca/ca.key"
)

elastic_index = "tweepy_twitter_api_v2"
mappings = {
    "properties": {
        "tweet_text": {"type": "text", "analyzer": "standard"},
        "created_at": {"type": "text", "analyzer": "standard"},
        "user_id": {"type": "text", "analyzer": "standard"},
        "user_name": {"type": "text", "analyzer": "standard"},
        "tweet_body": {"type": "text", "analyzer": "standard"}
    }
}

# The exists() result is truthy when the index is already there
if not es.indices.exists(index=elastic_index):
    es.indices.create(index=elastic_index, mappings=mappings)

class StreamListener(tweepy.StreamingClient):
    status_wrapper = TextWrapper(width=60, initial_indent=' ', subsequent_indent=' ')

    # StreamingClient (Twitter API v2) never calls the v1-style on_status();
    # on_response() receives the tweet together with its expanded users.
    def on_response(self, response):
        tweet = response.data
        if tweet is None:
            return
        try:
            current_date = datetime.utcnow().strftime('%Y%m%d%H%M%S%f')
            # Author objects arrive in includes when expansions=["author_id"] is requested
            users = {user.id: user for user in response.includes.get("users", [])}
            author = users.get(tweet.author_id)
            data = {
                'tweet_text': tweet.text,
                'created_at': str(tweet.created_at),
                'user_id': str(tweet.author_id),
                'user_name': author.name if author else None,
                # json.dumps returns a string; json.dump needs a file object
                'tweet_body': json.dumps(tweet.data)
            }
            logger.info("on_response")
            logger.info(data)
            es.index(
                index=elastic_index,
                id="tweet_" + current_date,
                document=data
            )
            logger.info("es.index success")
        except Exception:
            logger.exception("failed to index tweet")

#streamer = tweepy.Stream(auth=auth, listener=StreamListener(), timeout=3000000000 )
#streamer = StreamListener(
# consumer_key,
# consumer_secret,
# access_token,
streamer = StreamListener(bearer_token=bearer_token, max_retries=3)

# Fill in your own keywords below
terms = '2023'
streamer.add_rules(tweepy.StreamRule(terms))
logger.info("start streamer.filter")
# Request created_at/author_id and expand the author so on_response gets user names
streamer.filter(tweet_fields=["created_at", "author_id"], expansions=["author_id"])
#streamer.userstream(None)
streamer.disconnect()
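The commented-out `ssl_assert_fingerprint` option in the code is an alternative to `ca_certs`. Instead of a CA file, you pin a certificate by its SHA-256 fingerprint, which is just the SHA-256 hash of its DER encoding. Here's a standard-library sketch that computes it from a PEM file, in the same colon-separated format `openssl x509 -fingerprint -sha256` prints. Which certificate's fingerprint the client accepts (the server certificate or the CA) depends on the library and Python version, so check the elasticsearch-py docs before relying on it. `sha256_fingerprint` is a hypothetical helper.

```python
import hashlib
import ssl


def sha256_fingerprint(pem_text: str) -> str:
    """SHA-256 fingerprint of a single PEM certificate, as colon-separated hex."""
    # PEM_cert_to_DER_cert base64-decodes the body between the BEGIN/END lines.
    der = ssl.PEM_cert_to_DER_cert(pem_text.strip())
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))
```

For example: `with open("/etc/elasticsearch/certs/ca/ca.crt") as f: print(sha256_fingerprint(f.read()))`. The file must contain exactly one certificate, since `PEM_cert_to_DER_cert` does not handle bundles.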

Saved it, ran it, and it worked.

The documentation for the Python elasticsearch library is hard to follow...

It leaves me frustrated every single time...

That's all for this time.