⇧ Oh, my gosh...

I want to build a Kubernetes environment on-premises with Vagrant, but...

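As usual, information on building Kubernetes in an on-premises environment is scarce.

⇧ According to the official documentation, "kind" is not mentioned, and the installation methods it lists are: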

- kubeadm

- Kubernetes Cluster API

- kOps

- kubespray

So apparently there are these four installation methods.

For installation with "kubeadm",

⇧ there is the documentation above, but it is hard to tell which information is actually needed to build a Kubernetes environment...

When using "Vagrant" together with "Ansible",

⇧ there is apparently an issue with users.

As for "Ansible",

⇧ there is also an issue with file permissions.

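Vagrant and VirtualBox are far too unstable...

For now,

both of them have so many defects that setting up the environment does not go smoothly at all...

The whole point is supposed to be independence from the environment, yet the conditions for getting things to run properly are such a black box that, regrettably, it does not hold up in practical use...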

I installed them with winget, and

| No. | Software | Version |
|---|---|---|
| 1 | Vagrant | 2.4.3 |
| 2 | VirtualBox | 7.1.4 |

I ended up using the versions above, but it is hard to tell which "Linux distribution" versions are supported...

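In fact, so many of them fail with errors and cannot be used that I can't help suspecting they are no longer maintained.

To begin with, it is a real pity that I could not find a way to check from the command line which "box files" are available for "Vagrant"...

And it is painful that the official documentation has no sample Vagrantfile for a Windows host...

⇧ Windows environments are not taken into consideration at all...

Having to configure things by feel is just too chaotic...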

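I wanted to use Vagrant and VirtualBox to install Ansible on the guest OS and build a Kubernetes environment, but...

The information is a bit dated, but

⇧ the official blog has a post on building a cluster with Ansible and Vagrant. However, it is hard to tell what is needed on the "host side" and what is needed on the "guest side"...

I have no idea why the requirements are not laid out clearly...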

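Prepare the following files on the host, which runs Windows 10 Home.

vagrant/
├── Vagrantfile                  # Vagrant configuration file
├── ansible/
│   ├── ansible.cfg              # Ansible configuration file
│   ├── inventory.ini            # IP addresses etc. of the target machines
│   └── k8s-setup.yml            # Ansible playbook (Kubernetes setup)
├── k8s-manifests/               # Kubernetes manifest files
│   ├── awx-deployment.yml       # Kubernetes Deployment for AWX
│   └── squid-deployment.yml     # Kubernetes Deployment for Squid
└── docker-compose.yml           # docker-compose configuration for worker node 2

Then, thanks to all the bugs, working out the contents of these files made painfully slow progress...

The information out there is so tangled that it was unclear what was correct, and it ended up taking about a week, but the files now look like this.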

■D:\work-soft\vagrant\ansible\ansible.cfg

[defaults]
host_key_checking = False

■D:\work-soft\vagrant\ansible\inventory.ini

[master]
192.168.50.10 ansible_user=vagrant ansible_ssh_private_key_file=/home/vagrant/.ssh/id_rsa

[worker1]
192.168.50.11 ansible_user=vagrant ansible_ssh_private_key_file=/home/vagrant/.ssh/id_rsa

[worker2]
192.168.50.12 ansible_user=vagrant ansible_ssh_private_key_file=/home/vagrant/.ssh/id_rsa

[workers:children]
worker1
worker2

[all:children]
master
workers
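One thing worth noting about this inventory: the hosts are registered under their IP addresses, so `inventory_hostname` is `192.168.50.11` rather than `worker1`, and no host called `master` exists for `hostvars['master']` to find. The playbook below relies on both names, which may be one reason tasks get skipped. A minimal sketch of an equivalent YAML inventory that names each host and keeps the IP in `ansible_host` follows. The file name `inventory.yml` is an assumption, not a file from this setup.

```yaml
# inventory.yml (hypothetical file name; same machines as inventory.ini)
all:
  vars:
    ansible_user: vagrant
    ansible_ssh_private_key_file: /home/vagrant/.ssh/id_rsa
  hosts:
    # A host literally named "master", so `hosts: master` and hostvars['master'] resolve
    master:
      ansible_host: 192.168.50.10
  children:
    workers:
      hosts:
        # Host names match the `inventory_hostname == 'worker1'` style conditions
        worker1:
          ansible_host: 192.168.50.11
        worker2:
          ansible_host: 192.168.50.12
```

It would be passed the same way as the INI file, for example `ansible-playbook -i inventory.yml k8s-setup.yml`.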

■D:\work-soft\vagrant\ansible\k8s-setup.yml

---
- hosts: all
  become: yes
  tasks:
    - name: Install required packages
      apt:
        name:
          - apt-transport-https
          - ca-certificates
          - curl
          - gpg
        state: present
        update_cache: yes

    - name: Download Google Cloud public signing key
      shell: curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | gpg --dearmor -o /usr/share/keyrings/kubernetes-archive-keyring.gpg

    - name: Add Kubernetes apt repository
      shell: echo 'deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | tee /etc/apt/sources.list.d/kubernetes.list

    - name: Update apt package index
      apt:
        update_cache: yes

    - name: Install Docker
      apt:
        name: docker.io
        state: present
        update_cache: yes

    - name: Install Kubernetes packages
      apt:
        name:
          - kubeadm
          - kubelet
          - kubectl
        state: present
        update_cache: yes

    - name: Hold Kubernetes packages
      command: apt-mark hold kubeadm kubelet kubectl

    - name: Start Docker service
      service:
        name: docker
        state: started
        enabled: true

    - name: Start kubelet service
      systemd:
        name: kubelet
        enabled: yes
        state: started

    - name: Disable swap
      shell: |
        swapoff -a
        sed -i '/swap/d' /etc/fstab

- hosts: master
  become: yes
  tasks:
    - name: Initialize Kubernetes master node
      shell: kubeadm init --pod-network-cidr=10.244.0.0/16
      register: kubeadm_output
      ignore_errors: yes

    - name: Print kubeadm init logs for debugging
      debug:
        var: kubeadm_output.stderr_lines

    - name: Save the kubeadm join command for workers
      set_fact:
        join_command: "{{ kubeadm_output.stdout | regex_search('kubeadm join .*') }}"

    - name: Set up kubeconfig for kubectl
      shell: |
        mkdir -p $HOME/.kube
        sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        sudo chown $(id -u):$(id -g) $HOME/.kube/config
      when: kubeadm_output.rc == 0

    - name: Wait for Kubernetes API server to be ready
      shell: |
        until kubectl get nodes; do sleep 10; done
      when: kubeadm_output.rc == 0

    - name: Apply Flannel CNI plugin
      shell: kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
      when: kubeadm_output.rc == 0

- hosts: workers
  become: yes
  tasks:
    - name: Join Kubernetes cluster
      shell: |
        export node_ip={{ ansible_host }} && {{ hostvars['master'].join_command }}
      when: hostvars['master'].join_command is defined

    - name: Generate AWX and Squid Pods on worker1
      shell: |
        kubectl apply -f /home/vagrant/k8s-manifests/awx-deployment.yml
        kubectl apply -f /home/vagrant/k8s-manifests/squid-deployment.yml
      when: inventory_hostname == 'worker1'

    - name: Set up Docker Compose on worker2
      shell: |
        cd /home/vagrant/docker-compose
        docker-compose up -d
      when: inventory_hostname == 'worker2'
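Two details in the playbook above are likely to get in the way of the join step. First, `kubeadm init` prints the join command across two lines joined by a trailing backslash, so `regex_search('kubeadm join .*')` captures only the first line. Second, `hostvars['master']` looks for a host named `master`, while the INI inventory registers the hosts under their IP addresses. The following is a minimal sketch of one way around both, assuming the INI inventory shown earlier, where the `master` group contains exactly one host. The task names and the `join_command_output` variable are made up for illustration.

```yaml
# Sketch only: replaces the regex-based set_fact and the join task
- hosts: master
  become: yes
  tasks:
    - name: Ask kubeadm for a single-line join command
      command: kubeadm token create --print-join-command
      register: join_command_output

    - name: Store the join command as a fact on the control-plane host
      set_fact:
        join_command: "{{ join_command_output.stdout | trim }}"

- hosts: workers
  become: yes
  tasks:
    - name: Join the cluster unless this node has already joined
      # groups['master'][0] is the first (here: only) host in the master group
      command: "{{ hostvars[groups['master'][0]].join_command }}"
      args:
        # kubeadm join writes this file, so a second run skips the task
        creates: /etc/kubernetes/kubelet.conf
```

Looking the host up through `groups['master'][0]` avoids depending on what the host happens to be called in the inventory.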

■D:\work-soft\vagrant\k8s-manifests\awx-deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: awx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: awx
  template:
    metadata:
      labels:
        app: awx
    spec:
      containers:
        - name: awx
          image: ansible/awx:latest
          ports:
            - containerPort: 80

■D:\work-soft\vagrant\k8s-manifests\squid-deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: squid
spec:
  replicas: 1
  selector:
    matchLabels:
      app: squid
  template:
    metadata:
      labels:
        app: squid
    spec:
      containers:
        - name: squid
          image: sameersbn/squid:latest
          ports:
            - containerPort: 3128
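The playbook task "Generate AWX and Squid Pods on worker1" runs `kubectl apply` on worker1, but the machine where `kubectl` runs does not decide where a Pod is placed; the scheduler does. If the intent is for the Pods to run on worker1, one option is a `nodeSelector`. The sketch below is the Squid manifest with that single addition, and it assumes the node registered itself under its hostname, `worker1`.

```yaml
# squid-deployment.yml with a node selector (sketch; only nodeSelector is new)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: squid
spec:
  replicas: 1
  selector:
    matchLabels:
      app: squid
  template:
    metadata:
      labels:
        app: squid
    spec:
      # Assumption: the kubelet on worker1 set this well-known label to "worker1"
      nodeSelector:
        kubernetes.io/hostname: worker1
      containers:
        - name: squid
          image: sameersbn/squid:latest
          ports:
            - containerPort: 3128
```

`kubectl get nodes --show-labels` shows the actual label values once the nodes have joined.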

■D:\work-soft\vagrant\docker-compose.yml

version: "3.7"
services:
  kubectl:
    image: bitnami/kubectl:latest
    container_name: kubectl
    volumes:
      - /vagrant/k8s-manifests:/workspace
    entrypoint: "/bin/bash"
    tty: true

  k3s:
    image: rancher/k3s:v1.25.6-k3s1
    container_name: k3s
    command: server --no-deploy=traefik
    volumes:
      - /var/lib/rancher/k3s:/var/lib/rancher/k3s
    ports:
      - "6443:6443"
    environment:
      - K3S_KUBEVIRT_VERSION=v0.37.1
    restart: always
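A few points in the `k3s` service may need attention. The k3s documentation describes `--disable` as the replacement for the deprecated `--no-deploy` flag, so it is worth checking whether v1.25.6 still accepts the old spelling. k3s also generally needs a privileged container to run inside Docker. The sketch below shows the service with those two changes only. `K3S_KUBEVIRT_VERSION` is left out because I could not confirm it as a k3s setting; that omission is an assumption on my part, not a verified fix.

```yaml
# docker-compose.yml, k3s service only (sketch under the assumptions above)
services:
  k3s:
    image: rancher/k3s:v1.25.6-k3s1
    container_name: k3s
    # --disable is the documented replacement for --no-deploy
    command: server --disable=traefik
    # k3s runs its own container runtime, which needs elevated privileges in Docker
    privileged: true
    volumes:
      - /var/lib/rancher/k3s:/var/lib/rancher/k3s
    ports:
      - "6443:6443"
    restart: always
```

The top-level `version` key is omitted here because current Compose releases treat it as optional.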

■D:\work-soft\vagrant\Vagrantfile

Vagrant.configure("2") do |config|
  # Guest OS box
  config.vm.box = "bento/ubuntu-24.04"
  config.vm.box_version = "202404.26.0"

  # Increase the boot timeout
  config.vm.boot_timeout = 900

  # common ssh-private-key
  config.ssh.insert_key = false
  config.ssh.private_key_path = "C:/Users/toshinobu/.vagrant.d/insecure_private_key"

  # copy to private-key
  config.vm.provision "file", source: "C:/Users/toshinobu/.vagrant.d/insecure_private_key", destination: "/home/vagrant/.ssh/id_rsa"
  config.vm.provision "shell", privileged: false, inline: <<-SHELL
    chmod 600 /home/vagrant/.ssh/id_rsa
  SHELL

  # Worker node 1
  config.vm.define "worker1" do |worker1|
    # Provider settings for the virtual machine
    worker1.vm.provider "virtualbox" do |vb|
      vb.memory = "1024"
      vb.cpus = 1
    end
    worker1.vm.network "private_network", type: "static", ip: "192.168.50.11"
    worker1.vm.hostname = "worker1"

    # Synced folders (including permission settings)
    worker1.vm.synced_folder "./ansible", "/home/vagrant/ansible", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    worker1.vm.synced_folder "./k8s-manifests", "/home/vagrant/k8s-manifests", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]

    # # Receive the SSH key
    # worker1.vm.provision "shell", privileged: true, inline: <<-SHELL
    #   mkdir -p /home/vagrant/.ssh
    #   chmod 700 /home/vagrant/.ssh
    #   [ ! -f /home/vagrant/.ssh/authorized_keys ] && touch /home/vagrant/.ssh/authorized_keys
    #   chmod 600 /home/vagrant/.ssh/authorized_keys
    # SHELL
  end

  # Worker node 2
  config.vm.define "worker2" do |worker2|
    # Provider settings for the virtual machine
    worker2.vm.provider "virtualbox" do |vb|
      vb.memory = "1024"
      vb.cpus = 1
    end
    worker2.vm.network "private_network", type: "static", ip: "192.168.50.12"
    worker2.vm.hostname = "worker2"

    # Synced folders (including permission settings)
    worker2.vm.synced_folder "./ansible", "/home/vagrant/ansible", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    worker2.vm.synced_folder "./k8s-manifests", "/home/vagrant/k8s-manifests", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    worker2.vm.provision "file", source: "./docker-compose.yml", destination: "/home/vagrant/docker-compose/docker-compose.yml"

    # # Receive the SSH key
    # worker2.vm.provision "shell", privileged: true, inline: <<-SHELL
    #   mkdir -p /home/vagrant/.ssh
    #   chmod 700 /home/vagrant/.ssh
    #   [ ! -f /home/vagrant/.ssh/authorized_keys ] && touch /home/vagrant/.ssh/authorized_keys
    #   chmod 600 /home/vagrant/.ssh/authorized_keys
    # SHELL
  end

  # Master node
  config.vm.define "master" do |master|
    # Provider settings for the virtual machine
    master.vm.provider "virtualbox" do |vb|
      vb.memory = "2048"
      vb.cpus = 2
    end
    master.vm.network "private_network", type: "static", ip: "192.168.50.10"
    master.vm.hostname = "master"

    # Synced folders (including permission settings)
    master.vm.synced_folder "./ansible", "/home/vagrant/ansible", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    master.vm.synced_folder "./k8s-manifests", "/home/vagrant/k8s-manifests", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]

    master.vm.provision "shell", privileged: true, inline: <<-SHELL
      # # Generate an SSH key for connecting to the Ansible target machines
      # ssh-keygen -t rsa -b 2048 -f /home/vagrant/.ssh/id_rsa -q -N ""

      # Ansible settings (disable host key checking)
      echo "[defaults]" > /home/vagrant/ansible/ansible.cfg
      echo "host_key_checking = False" >> /home/vagrant/ansible/ansible.cfg
      sudo chmod -R 755 /home/vagrant/ansible
      sudo apt-get install -y sshpass ansible bash-completion

      # # Register the public key on the Ansible target machines
      # for ip in 192.168.50.11 192.168.50.12; do
      #   sshpass -p "vagrant" ssh-copy-id -i /home/vagrant/.ssh/id_rsa.pub -o StrictHostKeyChecking=no vagrant@$ip
      # done

      cd /home/vagrant/ansible

      # Run the Ansible playbook
      ansible-playbook -i inventory.ini k8s-setup.yml -u vagrant
    SHELL
  end
end
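Because the playbook is launched from the master's shell provisioner, the run depends on worker1 and worker2 already accepting SSH connections at that moment, which is presumably why master is defined last. A small pre-flight play can make that dependency explicit. This is only a sketch: the play is hypothetical, would sit at the top of `k8s-setup.yml`, and the delay and timeout values are arbitrary.

```yaml
# Hypothetical pre-flight play, placed before the first play in k8s-setup.yml
- hosts: all
  gather_facts: no   # facts cannot be gathered until the connection works
  tasks:
    - name: Wait until every node accepts SSH connections
      wait_for_connection:
        delay: 5
        timeout: 300

    - name: Gather facts once the connection is up
      setup:
```

If a node never becomes reachable, the play fails here with a connection error instead of partway through the package installation.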

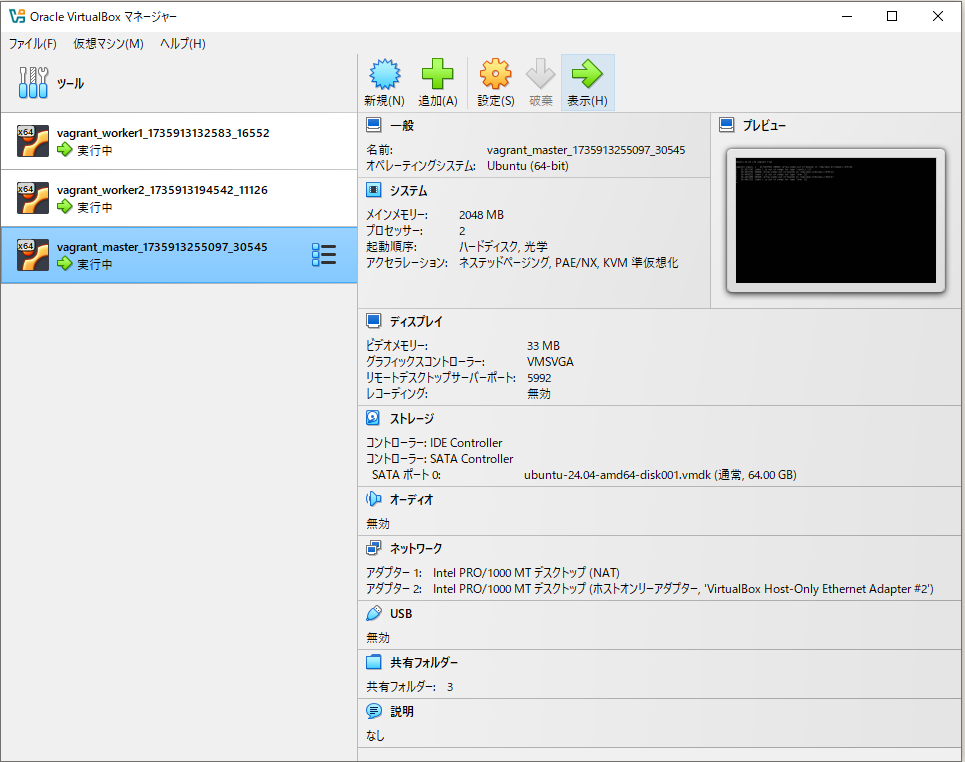

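So, for what it's worth, the virtual machines themselves are running.

However, some tasks are being skipped, so I suppose that means the Ansible playbook is misconfigured...

It turned out it had failed with errors...

All I did was burn my days off on a fruitless waste of time...

Nothing gained, nothing but a sense of wasted effort...

Addendum, Saturday, January 4, 2025: ↓ from here

For now,

⇧ I decided to write out a log.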

■D:\work-soft\vagrant\Vagrantfile

Vagrant.configure("2") do |config|
  # Guest OS box
  config.vm.box = "bento/ubuntu-24.04"
  config.vm.box_version = "202404.26.0"

  # Increase the boot timeout
  config.vm.boot_timeout = 900

  # common ssh-private-key
  config.ssh.insert_key = false
  config.ssh.private_key_path = "C:/Users/toshinobu/.vagrant.d/insecure_private_key"

  # copy to private-key
  config.vm.provision "file", source: "C:/Users/toshinobu/.vagrant.d/insecure_private_key", destination: "/home/vagrant/.ssh/id_rsa"
  config.vm.provision "shell", privileged: false, inline: <<-SHELL
    chmod 600 /home/vagrant/.ssh/id_rsa
  SHELL

  # Worker node 1
  config.vm.define "worker1" do |worker1|
    # Provider settings for the virtual machine
    worker1.vm.provider "virtualbox" do |vb|
      vb.memory = "1024"
      vb.cpus = 1
    end
    worker1.vm.network "private_network", type: "static", ip: "192.168.50.11"
    worker1.vm.hostname = "worker1"

    # Synced folders (including permission settings)
    worker1.vm.synced_folder "./ansible", "/home/vagrant/ansible", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    worker1.vm.synced_folder "./k8s-manifests", "/home/vagrant/k8s-manifests", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]

    # # Receive the SSH key
    # worker1.vm.provision "shell", privileged: true, inline: <<-SHELL
    #   mkdir -p /home/vagrant/.ssh
    #   chmod 700 /home/vagrant/.ssh
    #   [ ! -f /home/vagrant/.ssh/authorized_keys ] && touch /home/vagrant/.ssh/authorized_keys
    #   chmod 600 /home/vagrant/.ssh/authorized_keys
    # SHELL
  end

  # Worker node 2
  config.vm.define "worker2" do |worker2|
    # Provider settings for the virtual machine
    worker2.vm.provider "virtualbox" do |vb|
      vb.memory = "1024"
      vb.cpus = 1
    end
    worker2.vm.network "private_network", type: "static", ip: "192.168.50.12"
    worker2.vm.hostname = "worker2"

    # Synced folders (including permission settings)
    worker2.vm.synced_folder "./ansible", "/home/vagrant/ansible", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    worker2.vm.synced_folder "./k8s-manifests", "/home/vagrant/k8s-manifests", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    worker2.vm.provision "file", source: "./docker-compose.yml", destination: "/home/vagrant/docker-compose/docker-compose.yml"

    # # Receive the SSH key
    # worker2.vm.provision "shell", privileged: true, inline: <<-SHELL
    #   mkdir -p /home/vagrant/.ssh
    #   chmod 700 /home/vagrant/.ssh
    #   [ ! -f /home/vagrant/.ssh/authorized_keys ] && touch /home/vagrant/.ssh/authorized_keys
    #   chmod 600 /home/vagrant/.ssh/authorized_keys
    # SHELL
  end

  # Master node
  config.vm.define "master" do |master|
    # Provider settings for the virtual machine
    master.vm.provider "virtualbox" do |vb|
      vb.memory = "2048"
      vb.cpus = 2
    end
    master.vm.network "private_network", type: "static", ip: "192.168.50.10"
    master.vm.hostname = "master"

    # Synced folders (including permission settings)
    master.vm.synced_folder "./ansible", "/home/vagrant/ansible", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]
    master.vm.synced_folder "./k8s-manifests", "/home/vagrant/k8s-manifests", type: "virtualbox", create: true, mount_options: ["dmode=775", "fmode=664"]

    master.vm.provision "shell", privileged: true, inline: <<-SHELL
      # # Generate an SSH key for connecting to the Ansible target machines
      # ssh-keygen -t rsa -b 2048 -f /home/vagrant/.ssh/id_rsa -q -N ""

      # Ansible settings (disable host key checking)
      echo "[defaults]" > /home/vagrant/ansible/ansible.cfg
      echo "host_key_checking = False" >> /home/vagrant/ansible/ansible.cfg
      sudo chmod -R 755 /home/vagrant/ansible
      sudo apt-get install -y sshpass ansible bash-completion

      # # Register the public key on the Ansible target machines
      # for ip in 192.168.50.11 192.168.50.12; do
      #   sshpass -p "vagrant" ssh-copy-id -i /home/vagrant/.ssh/id_rsa.pub -o StrictHostKeyChecking=no vagrant@$ip
      # done

      cd /home/vagrant/ansible

      # Run the Ansible playbook (verbose, with the output also saved to a log file)
      ansible-playbook -i inventory.ini k8s-setup.yml -u vagrant -e 'ansible_python_interpreter=/usr/bin/python3' -vvv | tee /home/vagrant/ansible/ansible-playbook.log
    SHELL
  end
end
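The `-e 'ansible_python_interpreter=/usr/bin/python3'` option added above can also live in a variables file next to the inventory, which keeps the command line shorter. A minimal sketch, assuming a `group_vars` directory is created under `ansible/` (that directory is not part of the layout listed earlier):

```yaml
# ansible/group_vars/all.yml (hypothetical file; applies to every host in the inventory)
ansible_python_interpreter: /usr/bin/python3
```

One caveat about the logging approach itself: when the output is piped through `tee`, the exit status of the pipeline is that of `tee`, so Vagrant will most likely report the provisioner as successful even when `ansible-playbook` failed. The log file is then the place to look for the real result.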

For the kubeadm part of the Ansible playbook as well,

⇧ I tried adding to it based on the information above, but

■D:\work-soft\vagrant\ansible\k8s-setup.yml

---
- hosts: all
  become: yes
  tasks:
    - name: Install required packages
      apt:
        name:
          - apt-transport-https
          - ca-certificates
          - curl
          - gpg
        state: present
        update_cache: yes

    - name: Download Google Cloud public signing key
      shell: curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | gpg --dearmor -o /usr/share/keyrings/kubernetes-archive-keyring.gpg

    - name: Add Kubernetes apt repository
      shell: echo 'deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | tee /etc/apt/sources.list.d/kubernetes.list

    - name: Update apt package index
      apt:
        update_cache: yes

    - name: Install Docker
      apt:
        name: docker.io
        state: present
        update_cache: yes

    - name: Install Kubernetes packages
      apt:
        name:
          - kubeadm
          - kubelet
          - kubectl
        state: present
        update_cache: yes

    - name: Hold Kubernetes packages
      command: apt-mark hold kubeadm kubelet kubectl

    - name: Start Docker service
      service:
        name: docker
        state: started
        enabled: true

    - name: Start kubelet service
      systemd:
        name: kubelet
        enabled: yes
        state: started

    - name: Disable swap
      shell: |
        swapoff -a
        sed -i '/swap/d' /etc/fstab

- hosts: master
  become: yes
  tasks:
    - name: Pull required images for Kubernetes
      shell: kubeadm config images pull

    - name: Initialize Kubernetes master node
      shell: >
        kubeadm init
        --pod-network-cidr=10.244.0.0/16
        --apiserver-advertise-address=192.168.50.10
        --image-repository=registry.k8s.io
        --kubernetes-version=stable-1
        --cri-socket=unix:///var/run/containerd/containerd.sock
      register: kubeadm_output
      ignore_errors: yes

    - name: Print kubeadm init logs for debugging
      debug:
        var: kubeadm_output.stderr_lines

    - name: Save the kubeadm join command for workers
      set_fact:
        join_command: "{{ kubeadm_output.stdout | regex_search('kubeadm join .*') }}"

    - name: Set up kubeconfig for kubectl
      shell: |
        mkdir -p $HOME/.kube
        sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        sudo chown $(id -u):$(id -g) $HOME/.kube/config
      when: kubeadm_output.rc == 0

    - name: Check if admin.conf exists
      stat:
        path: /etc/kubernetes/admin.conf
      register: admin_conf

    - name: Fail if admin.conf does not exist
      fail:
        msg: "admin.conf does not exist"
      when: not admin_conf.stat.exists

    - name: Configure kubectl to access the cluster
      shell: |
        export KUBECONFIG=/etc/kubernetes/admin.conf
      when: kubeadm_output.rc == 0

    - name: Wait for Kubernetes API server to be ready
      shell: |
        until kubectl get nodes; do sleep 10; done
      when: kubeadm_output.rc == 0

    - name: Apply Flannel CNI plugin
      shell: |
        kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
      when: kubeadm_output.rc == 0

- hosts: workers
  become: yes
  tasks:
    - name: Test SSH connection to master node
      shell: |
        ssh -o StrictHostKeyChecking=no -i /home/vagrant/.ssh/id_rsa root@192.168.50.10 echo "SSH connection established"

    - name: Copy admin.conf from master node
      shell: |
        scp -o StrictHostKeyChecking=no -o ConnectTimeout=30 -i /home/vagrant/.ssh/id_rsa root@192.168.50.10:/etc/kubernetes/admin.conf /home/vagrant/admin.conf

    - name: Proxy API Server to localhost
      shell: |
        kubectl --kubeconfig /home/vagrant/admin.conf proxy

    - name: Join Kubernetes cluster
      shell: |
        export node_ip={{ ansible_host }} && {{ hostvars['master'].join_command }}
      when: hostvars['master'].join_command is defined

    - name: Generate AWX and Squid Pods on worker1
      shell: |
        kubectl apply -f /home/vagrant/k8s-manifests/awx-deployment.yml
        kubectl apply -f /home/vagrant/k8s-manifests/squid-deployment.yml
      when: inventory_hostname == 'worker1'

    - name: Install Docker Compose on worker2
      shell: |
        curl -L "https://github.com/docker/compose/releases/download/$(curl -s https://api.github.com/repos/docker/compose/releases/latest | grep tag_name | cut -d '\"' -f 4)/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
        chmod +x /usr/local/bin/docker-compose
      when: inventory_hostname == 'worker2'

    - name: Set up Docker Compose on worker2
      shell: |
        cd /home/vagrant/docker-compose
        docker-compose up -d
      when: inventory_hostname == 'worker2'

⇧ the errors are still not resolved...

I suppose I will have to work through them while checking the log...
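Two things in the playbook are worth checking against the log. Each Ansible task runs in its own shell process, so the `export KUBECONFIG=...` task has no effect on the tasks that follow it. And `kubectl proxy` stays in the foreground until it is interrupted, so the workers play will probably never get past that task. The sketch below shows one way to give `kubectl` its configuration per task and to bound the wait for the API server. It is a task fragment meant to sit under `tasks:` in the `master` play, and the retry counts are arbitrary.

```yaml
# Sketch for the control-plane play; kubeadm_output comes from the kubeadm init task
- name: Wait for the Kubernetes API server to respond
  command: kubectl get nodes
  environment:
    # Applies to this task only; repeat it on every task that calls kubectl
    KUBECONFIG: /etc/kubernetes/admin.conf
  register: get_nodes
  until: get_nodes.rc == 0
  retries: 30        # give up after roughly 30 x 10 seconds instead of looping forever
  delay: 10
  changed_when: false
  when: kubeadm_output.rc == 0
```

With `until` and `retries`, a cluster that never comes up produces a failed task with the last `kubectl` error in the log, rather than a run that hangs.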

The official documentation for installing Kubernetes is just too hard to follow...
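For reference, the `kubeadm init` flags used in the playbook can also be written as a kubeadm configuration file, which makes it easier to see which setting belongs to the node and which to the cluster. This is a sketch under assumptions: the file name is made up, and `v1beta4` is the configuration API version I would expect the v1.32 packages to accept.

```yaml
# kubeadm-config.yml (hypothetical file name), used as: kubeadm init --config kubeadm-config.yml
apiVersion: kubeadm.k8s.io/v1beta4
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.50.10      # --apiserver-advertise-address
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock   # --cri-socket
---
apiVersion: kubeadm.k8s.io/v1beta4
kind: ClusterConfiguration
kubernetesVersion: stable-1            # --kubernetes-version
imageRepository: registry.k8s.io       # --image-repository
networking:
  podSubnet: 10.244.0.0/16             # --pod-network-cidr
```

`kubeadm config print init-defaults` prints a complete default file that can be compared against this one.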

Addendum, Saturday, January 4, 2025: ↑ to here

Addendum, Thursday, January 9, 2025: ↓ from here

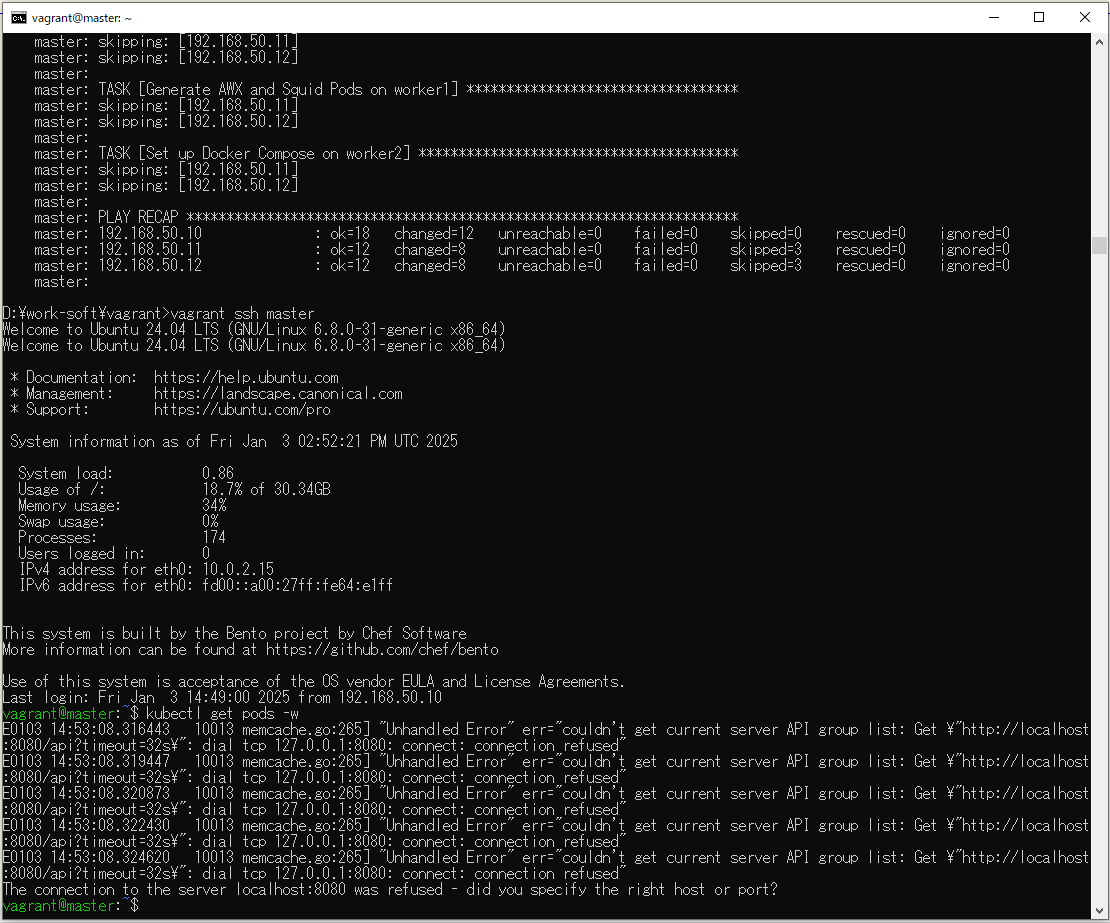

For now,

⇧ it looks like the "Kubernetes" environment has been built.

I have not yet checked whether the "Pods" are running.
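Checking the Pods could be done from the same playbook. The following is a minimal sketch, assuming it runs against the control-plane node, where `/etc/kubernetes/admin.conf` exists. The task names and the `pod_list` variable are made up for illustration.

```yaml
# Sketch: list every Pod and print the result in the playbook output
- hosts: master
  become: yes
  tasks:
    - name: List Pods in every namespace
      command: kubectl get pods --all-namespaces -o wide
      environment:
        KUBECONFIG: /etc/kubernetes/admin.conf
      register: pod_list
      changed_when: false   # read-only query, never reports a change

    - name: Show the Pod list
      debug:
        var: pod_list.stdout_lines
```

The `-o wide` output includes a NODE column, which also shows on which node each Pod was actually scheduled.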

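In any case, when it comes to "Ansible" and the like, the official documentation, which is supposed to be the primary source, is almost useless...

Addendum, Thursday, January 9, 2025: ↑ to here

As always, I am left with an overwhelming sense of things being unresolved…

That's all for this time.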